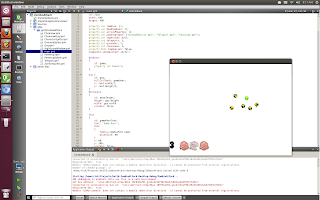

First, I want to extend my deepest apologies for how ugly this app is at the moment. It's embarrassing. Sorry. I will put lipstick on it later, I hope.

This app is for browsing technical news from Feedzilla. It has 3 pages: a page for categories (well, subcategories, because Tech is itself a category in Feedzilla), a list of articles, and a WebView to display an article.

| Main Category View | Article List View | Reading an Article in Embedded Webkit |

However, I did want to blog about part of how I built it, because I think it is generally useful for folks to see how I made my ListViews from JSON.

I blogged before about how I used an XmlListModel to make a ListView, and how easy that was. For this app, though, I wanted to use a JSON feed from Feedzilla, and there is no JsonListModel. So what did I do? Here's how I made the main category view.

To get started, I created a ListView, as usual:

    ListView
    {
        id: categoriesList
        anchors.fill: parent;
    }

It doesn't do anything yet, because there is no model set. Remember, a ListView's only purpose in life is to display data in a list format. The data that I want is available in JSON format, so, next I need to get the JSON and set the model of the ListView.

So, in the Component.onCompleted handler, I wrote some good old-fashioned Ajaxy JavaScript.

    Component.onCompleted:
    {
        //create a request and tell it where the json that I want is
        var req = new XMLHttpRequest();
        var location = "http://api.feedzilla.com/v1/categories/30/subcategories.json";
        req.open("GET", location, true);
        //wait until the readyState is 4, which means the json is ready
        //(the handler is attached before sending so that it is in place when the response arrives)
        req.onreadystatechange = function()
        {
            if (req.readyState == 4)
            {
                //turn the text into a javascript object while setting the ListView's model to it
                categoriesList.model = JSON.parse(req.responseText);
            }
        };
        //tell the request to go ahead and get the json
        req.send(null);
    }
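The handler above sets the model as soon as the readyState is 4, whatever the HTTP status or the body turned out to be. Here is a minimal sketch of a more defensive variant. The helper name `modelFromResponse` is hypothetical, and the sketch assumes a successful response comes back with status 200 and an array as its body:

```javascript
// Hypothetical helper: returns the parsed list, or null if the response
// is not usable. `req` is any object shaped like an XMLHttpRequest.
function modelFromResponse(req) {
    // readyState 4 means the request has finished
    if (req.readyState !== 4) {
        return null;
    }
    // assumption: a successful request is answered with HTTP 200
    if (req.status !== 200) {
        return null;
    }
    try {
        var parsed = JSON.parse(req.responseText);
        // this sketch only accepts an array, since the delegate indexes into it
        return Array.isArray(parsed) ? parsed : null;
    } catch (e) {
        // the body was not valid JSON
        return null;
    }
}
```

Inside the `onreadystatechange` function, this could be used as `var list = modelFromResponse(req); if (list !== null) categoriesList.model = list;` so that a failed request leaves the ListView empty instead of throwing.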

The key line is the one that sets the ListView's model to a JavaScript object:

    categoriesList.model = JSON.parse(req.responseText)

This works because the JSON that is returned is a list, which becomes a JavaScript array once it is parsed. So, I fetched a list in JavaScript Object Notation, parsed it, and used it as a model for a ListView. Easy, right?

So now that the ListView has a model, I can create a delegate. Remember, a delegate is a bit of code, like an anonymous method, that gets called for each element in the ListView's model and creates a component for it. Whenever the model for the ListView changes, the delegate is called again for the items the ListView needs to show. Every time the delegate is called, it gets passed "index", which is the index of the current item being created. So the delegate uses that to get the correct element from the list of JavaScript objects.

    ListView
    {
        id: categoriesList
        anchors.fill: parent;
        delegate: Button
        {
            width: parent.width
            text: categoriesList.model[index]["display_subcategory_name"];
            onClicked:
            {
                subCategorySelected(categoriesList.model[index]["subcategory_id"])
            }
        }
    }

My ListView is just a row of buttons, and the text is defined as "display_subcategory_name" in the JSON that I got from the server. So I can index in and set the text like so:

    text: categoriesList.model[index]["display_subcategory_name"];
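The lookup in the delegate is plain JavaScript indexing, so it can be tried outside QML. Here is a minimal sketch, assuming a made-up two-item list in the shape described above. The ids, the names, and the `fieldAt` helper are all hypothetical, not real Feedzilla data:

```javascript
// Hypothetical sample in the shape the post describes; the ids and names
// are made up for illustration.
var sampleModel = [
    { "subcategory_id": 1, "display_subcategory_name": "Gadgets" },
    { "subcategory_id": 2, "display_subcategory_name": "Programming" }
];

// Hypothetical helper mirroring the delegate's lookup: model[index][field].
function fieldAt(model, index, field) {
    var item = model[index];
    // fall back to an empty string if the index or the field is missing
    if (item === undefined || item[field] === undefined) {
        return "";
    }
    return item[field];
}

// what the first button would show as its text
var firstLabel = fieldAt(sampleModel, 0, "display_subcategory_name");
// what the second button would pass along when clicked
var secondId = fieldAt(sampleModel, 1, "subcategory_id");
```

The fallback is a design choice for the sketch: it keeps a button from showing "undefined" if the feed ever drops a field.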

Bonus snippet! The code that I wrote is a component that gets used in a PageStack. I don't want the PageStack to have to know about how the category list is implemented, of course, so I want my component to emit a signal when one of the categories is selected by the user (which is by clicking a button in this implementation).

Adding signals to a component is really easy in QML. First, you declare the signal where you declare properties and such for your component:

    signal subCategorySelected(int subCategoryId);

In this case, the signal has a single parameter. A signal can have as many parameters as you want.

Then, the onClicked handler for each button "fires" the signal by calling it like a function:

    subCategorySelected(categoriesList.model[index]["subcategory_id"])

That means my PageStack can just listen for that signal:

    onSubCategorySelected:
    {
        //do stuff with subCategoryId
    }

That's all you have to know to simplify your code with custom signals!